Why Is The World Afraid Of AI? The Fears Are Unfounded, And Here’s Why.

This week we saw another flurry of panic about AI. Elon Musk and a number of senior researchers wrote a letter, published by the Future of Life Institute, asking the world to “slow down” for six months before advancing beyond GPT-4. Goldman Sachs published a note that claims 300 million jobs will be affected by Generative AI and 7% of jobs could be eliminated. And a team of academics at Penn authored a study that claims 87% of jobs will be heavily impacted by AI.

Along with this noise come articles in the New York Times, Washington Post, and other journals painting pictures of AI machines running amok, ruining the political system, and eventually turning into “self-planning machines” that take power and disrupt the world.

And thanks to all this press, a recent survey shows that only 9% of average citizens believe AI will do more good than harm for society.

Let me make an argument that these concerns are unwarranted. While there are unpredictable outcomes with any new technology (we got carpal tunnel syndrome from our keyboards, “fake news” from Twitter, and child mental health problems from social media), AI itself is not evil, “out of control,” or necessarily dangerous. What is dangerous is how we decide to use it.

Let me discuss the four fears I hear:

1/ AI is going to eliminate jobs, create unemployment, and disrupt the global workforce.

Not true. I heard this story during the mid-2010s when the University of Oxford published reports that 47% of jobs would be eliminated by automation. At that point in time The Economist, McKinsey, and others predicted that computers would wipe out jobs in retail, food service, accounting, banking, and finance. And we saw charts and graphs that detailed which tasks, jobs, and careers would be eliminated.


Now, looking back, it’s not clear that any of this occurred. We have the lowest unemployment rate in 55 years, demand for front-line workers is at an all-time high, and the need for manufacturing, process, logistics, and tech workers is still unfilled.

Yes, the world continues to have issues with middle-class wages, and just this week Howard Schultz, the chairman and founder of Starbucks, was punishingly berated by a congressional committee about the company’s threats against labor unions. But the problem was not caused by automation: Starbucks employees are suffering from overwork, scheduling difficulties, and continuous stress from ever more demanding customers. Starbucks actually would benefit from more AI: it would make it easier to plan and schedule complex drink orders.

I gave a speech on this topic in 2018 at a Singularity conference in San Francisco and the argument I made then (I suggest it’s even more true now) is that economic data shows that every new technology wave creates new jobs, careers, and business opportunities. While researchers think “AI is different,” I already see thousands of new jobs for “prompt engineers” and “GPT4 trainers” and “integration specialists” (more than 2,000 jobs are asking for GPT or generative AI skills right now). And every vendor I talk with tells me AI will be our Copilot or our Assistant, not the “robot that takes away our job.”

Do you believe the “autocomplete” feature on your phone or email system took away your job? That feature is built on AI. Did your noise-cancelling headphones take jobs away? They’re powered by neural networks.

While there will be new skills, tools, and technologies to learn, the age of AI will unleash one of the biggest new markets for talent we have seen in years. And as I’ve discussed with many of you, only about 6% of jobs in the world are “engineers building technology” – the majority of us will integrate, use, and consult with these tools. If anything I think AI will create a massive demand for new skills and people who can manage, train, and support these systems.

And by the way, since AI is largely driven by mathematics, I bet we see an uptick in math majors and general interest in statistics, linear programming, and algebra.

2/ AI will accelerate income inequality, poverty, and homelessness.

Second fear: many sources cited in the Future of Life article blame technology for social unrest, mental health issues, and economic inequality. And this idea, that new technologies “hollow out” the middle class and reserve their benefits for the rich, continues to drive many fears.

The reality is that the opposite is true. Let’s look at the data. For every new technology that’s invented (the computer, website, cloud, mobile, AI), we create a new industry of jobs. Jobs in user interface design, full-stack engineering, and front-end software are exploding. And today, thanks to bootcamps and community college education, anyone with a high school degree can become a computer programmer.

Consider this: in 2022 the Tech Bootcamp market (teaching people to code) was over $420 Million and one study found that 4% of non-tech workers have now learned to code. These technologies are creating a huge new career opportunity for underpaid workers, and AI will do the same.

Companies are now funding this upskilling. Walmart invests in career upskilling through its Career Pathways program, paying employees to finish college, get certificates, and move to new roles. Amazon, Tyson Foods, and even Waste Management have similar programs. And as Sundar Pichai, CEO of Google, discusses in his latest podcast, AI makes it easier than ever to learn to code. So it’s clear to me that many new entry-level careers will be created around AI.

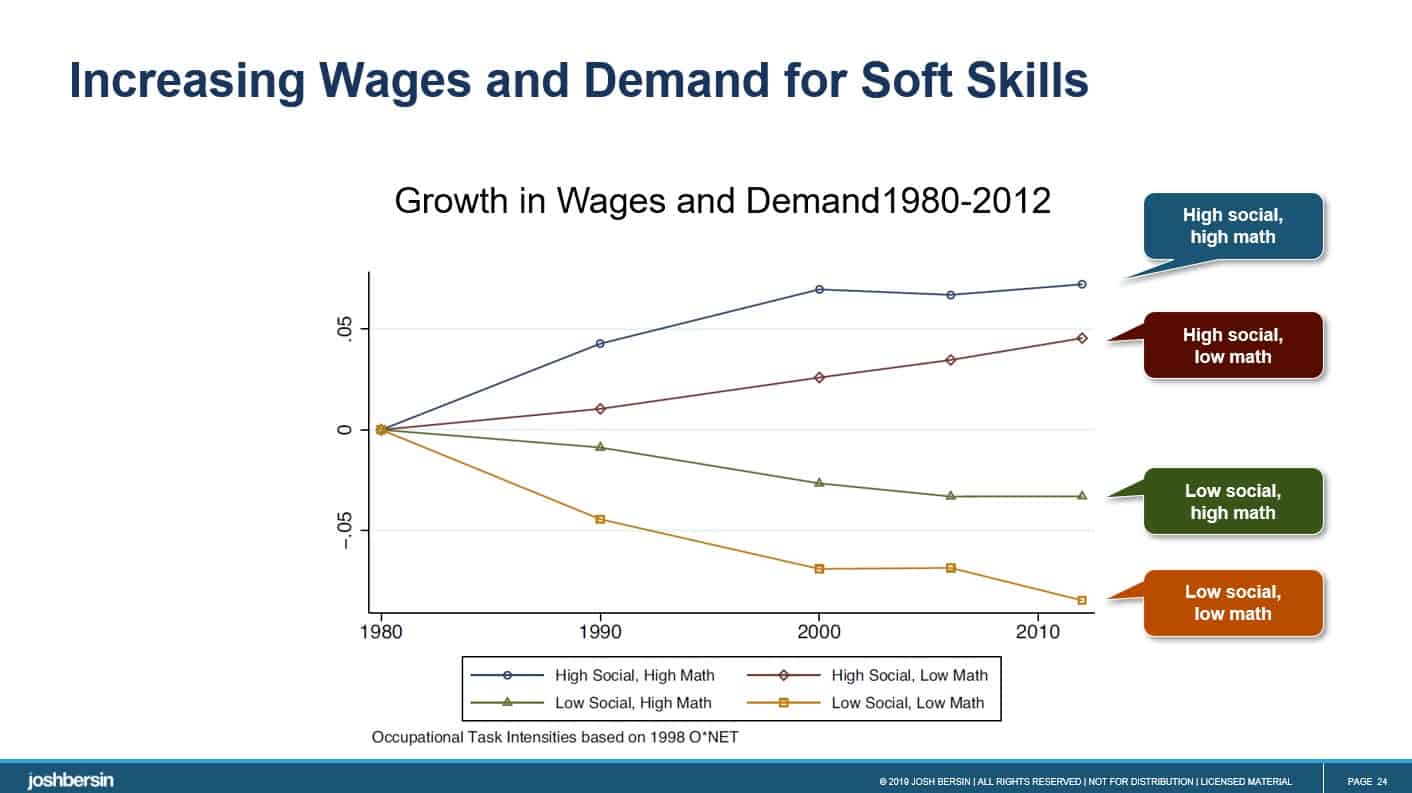

As for wages, there will be a high demand for AI skills, but only for a while. While rarefied computer scientists command high salaries, over time the highest paid professionals combine technical and soft skills (what we call PowerSkills). Many years ago I discovered an academic study that plotted wage growth by occupational cluster, grouping jobs into four categories based on “social skills” vs. “math skills.”


What you see is that over this 22-year period, workers with “social skills” (i.e., management, leadership, communication, sales, time management, etc.) far outearn the “techies” who focus on math (or science and engineering) alone. Why is this? Technical skills decay in value at a rapid rate. If you, as an engineer, are not quickly learning, working with other people, and applying your work (e.g., becoming a product manager), you do tend to fall behind.

I’m not diminishing the value of technical careers (I am an engineer myself). I’m simply observing that no technology (even AI) has ever changed the need for managers, leaders, sales people, designers, analysts, and financial professionals in business. These jobs, which require an “understanding” of AI (not the ability to develop it), will continue to grow in importance and AI will simply make these jobs easier, more interesting, and more important.

There are many technical professionals who started careers as database analysts, moved to big data analytics, and possibly became data scientists. These people can now learn AI and add value in new ways. The managers and leaders around them will continue to thrive, all empowered and further enabled by AI. So in many ways AI is an enabler of economic growth, once we get over this “freaked out” period of worry we all seem to have entered.

3/ AI will create misinformation, cyber warfare, amplified bias, and eventually massive system destruction.

The third issue to debate is the “irresponsible use” of AI. People worry that AI systems will result in unfair incarceration, spam and misinformation, cyber-security catastrophes, and eventually a “smart and planning” AI that will take over power plants, information systems, hospitals, and other institutions.

There’s no question that neural networks have bias. They are trained with limited information, so if they index lies or bias (e.g., they analyze careers and decide that white males are more likely to succeed than African American females), they will give us misleading advice. So these systems could “accelerate” problems and contribute to nefarious behavior.

But let me remind you that Facebook, Twitter, and other social networks do this today. All “open communications systems” lead to abuse and misbehavior, and in some ways Generative AI can clean this up. In only a few weeks, for example, Microsoft dramatically improved the behavior of Bing, which now cites sources for any authoritative statement. Google Bard, which is still in experimental mode, is undergoing rigorous testing by Google and is heavily supported by safety tools in Google Search. (And much of the work to fix Facebook and Twitter uses AI to spot and eliminate hate speech and dangerous photos.)

Any AI product used for HR, whether it comes from Microsoft or your HR technology vendor, will be subject to legal and regulatory rules. New York and other jurisdictions have announced fines and criminal penalties for products that show bias in selection, pay, or other HR matters. And companies like Microsoft and Google are already worried about IP ownership and other legal risks. These laws exist today, so any provider or vendor will have many reasons to comply.

Most of the HR vendors I talk with are very focused on this issue. They have “responsible AI” mandates, they are building and releasing explainability features, and they are testing their LLMs (Large Language Models) on safer and safer data sets. And if you, as a buyer, find that the system is biased or flawed, you simply will not use it or pay for it.

As for bias, I would suggest that humans are the most biased of all. A senior engineer at Google told me that their HR department studied hiring in detail and found that AI-based hiring (using data and neural networks to help select candidates) proved to be far less biased than human interviews. While AI is certainly not perfect, we as humans are pretty biased already.

On the topic of information security and warfare we have to assume that AI-enabled nefarious behaviors are already happening. There’s nothing to keep a cyber criminal from hiring a software engineer and building an AI model today (I would guess they do). So the answer is not to put the genie back into the bottle but rather to create an industry of tools, regulations, and monitoring systems to combat these actors.

4/ AI will create a sentient, evil “general AI” engine that could wipe out humanity.

Then there’s the biggest fear of all: AI is going to “run amok.” And of course movies like Ex Machina certainly give us pause.

One of the academic papers cited predicts “power-seeking AI” and concludes that there is a 5% chance that AI will destroy humanity by 2070. I read it in detail and remained very unconvinced.

While I’m not a mathematician, I did study statistics and linear algebra in college. I have yet to be convinced that neural networks and cost-minimization functions will spring to life and decide they want to destroy human beings. Yes, the decisions these systems make are hard to understand. But as we focus our efforts on “explainability” and bias, I believe these systems will become more transparent.

I suggest this: every technology ever invented has been used for evil purposes. Email is used for identity theft and the distribution of viruses and spyware. Mobile phones are filled with spam and can be used for surveillance. Despite a large industry of cyber-security tools, hospitals and governments continue to deal with ransomware.

Even Robert Oppenheimer, who invented the atomic bomb, believed nuclear science would be used for good. And today the benefits include NMR scanning, cancer treatment, and even smoke detectors.

“If you are a scientist you believe that it is good to find out how the world works; that it is good to find out what the realities are; that it is good to turn over to mankind at large the greatest possible power to control the world and to deal with it according to its lights and its values.” – Robert Oppenheimer, who led the development of the nuclear bomb.

We must assume that this technology, like all others ever invented, will be tamed and improved over time.

The Positive Side Of The Story

Over the last few years I have talked with hundreds of software companies that use AI for a wide variety of purposes. In every case their tools have made work easier, improved the efficiency of businesses, and made life better. Let me just cite a few examples.

Credit card companies can spot fraud and protect us from theft in near real time (all done using AI). Insurance companies can price your accident claim from a photo, saving you days of effort. Noise-cancelling headphones make workouts fun and exciting (using a neural network). New recruiting tools save companies millions of dollars selecting job candidates who don’t need college degrees to compete. And talent intelligence platforms suggest career advice, coaches, and education to help you grow and progress.

So Why Are We Afraid? It may be something different.

So why are people afraid? According to the Edelman Trust Barometer, scientists are the most trusted constituents in the world. I sense there’s something else going on.

As Edelman states, we live in a world that questions authority. We feel the CDC let us down when we got sick. We blame the Fed for high inflation and escalating interest rates. And rather than accept that society, as a whole, gets better over time, it feels like everything is getting worse. So AI, which we sense is being invented by a “group of experts we don’t trust,” falls into the category of one more thing that will get out of control.

Well, count me as naive, but I see the arc of history here. This invention, like the development of nuclear fission, has the potential to do amazing things.

Perhaps we should listen to Bill Gates, who believes “The Age of AI” will bring spectacular improvements in healthcare, education, art, and business. Or watch Michael Bloomberg, who launched BloombergGPT, positioned as the largest neural network for finance ever created.

AI will provide benefits we can’t even imagine. Let’s just give it some time.

Stay tuned for another big announcement this week. The positive power of AI will become clearer every day.
